
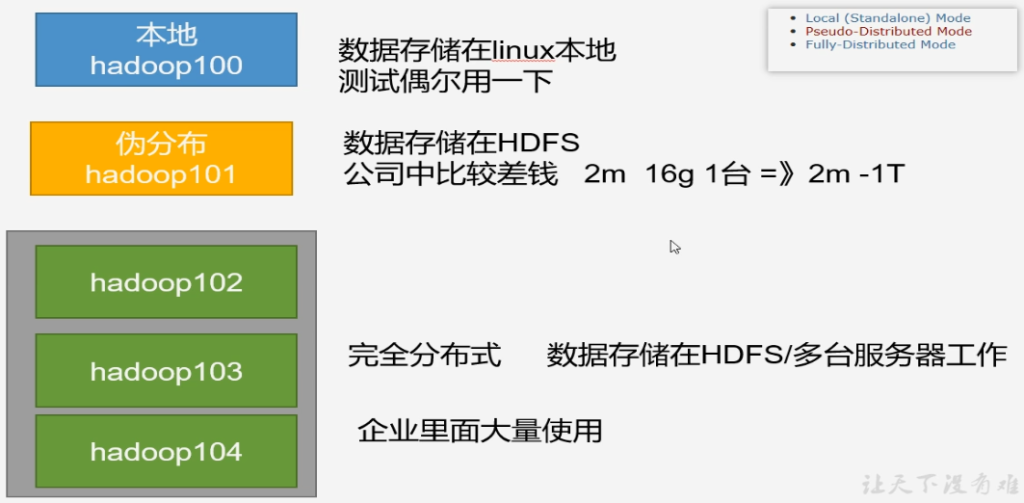

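hadoop-03 Run Modes

- Local (standalone) mode
  - Data is stored on the local Linux filesystem
- Pseudo-distributed mode
  - Data is stored in HDFS
- Fully distributed mode
  - Data is stored in HDFS
  - Multiple servers work together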

Local mode

Preparation

- Input file: ~/wcinput/words.txt
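To get a feel for what the wordcount job should produce, the same counts can be reproduced on a small file with standard tools. This is only a rough local sketch, assuming words are separated by whitespace; the helper name `local_wordcount` and the sample contents are made up for illustration and are not part of Hadoop.

```shell
# Hypothetical helper: count whitespace-separated words in a file and
# print "word<TAB>count", sorted by word.
local_wordcount() {
    tr -s '[:space:]' '\n' < "$1" \
        | grep -v '^$' \
        | LC_ALL=C sort \
        | uniq -c \
        | awk '{ printf "%s\t%s\n", $2, $1 }'
}

# Sample input in a scratch directory (contents are invented for the demo)
demo_dir=$(mktemp -d)
printf 'hadoop yarn\nhadoop mapreduce\nhdfs hadoop\n' > "$demo_dir/words.txt"

local_wordcount "$demo_dir/words.txt"
```

For the sample above this prints four lines: hadoop 3, hdfs 1, mapreduce 1, yarn 1. The output of the real job can be compared against it by eye.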

hadoop jar /opt/module/hadoop-3.1.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount ~/wcinput/ ~/wcoutput

Fully distributed mode

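Steps:

- Prepare 3 client machines
  - Disable the firewall
  - Set a static IP
  - Set the hostname
- Install the JDK
- Configure environment variables
- Install Hadoop
- Configure environment variables
- Configure the cluster
- Start the daemons one at a time
- Configure ssh
- Start the whole cluster and test it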

Writing the cluster distribution script

scp (secure copy)

- scp copies data between servers (from server1 to server2)
- Basic syntax
  - -r: copy recursively
  - $pdir/$fname: path and name of the file to copy
  - $user@$host:$pdir/$fname: destination user@host:path/name

scp -r $pdir/$fname $user@$host:$pdir/$fname

scp -r $user@$host:$pdir/$fname $pdir/$fname

- Hands-on example
  - Prerequisite: the /opt/module and /opt/software directories already exist on hadoop102, hadoop103, and hadoop104, and are owned by atguigu (chown -R atguigu:atguigu)

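rsync remote synchronization tool

sudo yum install -y rsync

- rsync only updates files that differ, while scp copies every file
- Basic syntax
  - -a: archive mode
  - -v: show the copy progress

rsync -av $pdir/$fname $user@$host:$pdir/$fname

xsync cluster distribution script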

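#!/usr/bin/env bash

# 1. Check the number of arguments
if [ $# -lt 1 ]
then
    echo "Not Enough Argument!"
    exit 1
fi

# 2. Loop over every machine in the cluster
for host in hadoop102 hadoop103 hadoop104
do
    echo "========== $host =========="
    # 3. Loop over every file or directory given and send them one by one
    for file in "$@"
    do
        # 4. Check that the file exists
        if [ -e "$file" ]
        then
            # 5. Get the parent directory; cd -P resolves symlinks to the real path
            pdir=$(cd -P "$(dirname "$file")"; pwd)
            # 6. Get the name of the file itself
            fname=$(basename "$file")
            # mkdir -p does not fail if the directory already exists
            ssh "$host" "mkdir -p $pdir"
            rsync -av "$pdir/$fname" "$host:$pdir"
        else
            echo "$file does not exist!"
        fi
    done
done

- chmod 777 xsync
- Sync a file that requires root privileges

sudo ./xsync /etc/profile.d/myenv.sh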
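The path handling in steps 5 and 6 of the script can be tried locally without any remote host. The sketch below is an illustration only: `resolve_target` is a hypothetical helper that mirrors those two steps, and the scratch directory layout is invented. It shows that a path reached through a symlink is reported under its real parent directory, which is the directory the script then creates on each remote host.

```shell
# Hypothetical helper mirroring steps 5 and 6 of the script:
# resolve the real parent directory, then append the file name.
resolve_target() {
    pdir=$(cd -P "$(dirname "$1")" && pwd)
    fname=$(basename "$1")
    printf '%s/%s\n' "$pdir" "$fname"
}

# Scratch layout: real/conf/site.xml, plus a symlink "link" pointing at "real"
scratch=$(cd -P "$(mktemp -d)" && pwd)
mkdir -p "$scratch/real/conf"
touch "$scratch/real/conf/site.xml"
ln -s "$scratch/real" "$scratch/link"

# The path goes through the symlink, but the result uses the real directory
resolve_target "$scratch/link/conf/site.xml"
```

The printed path ends in real/conf/site.xml rather than link/conf/site.xml.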

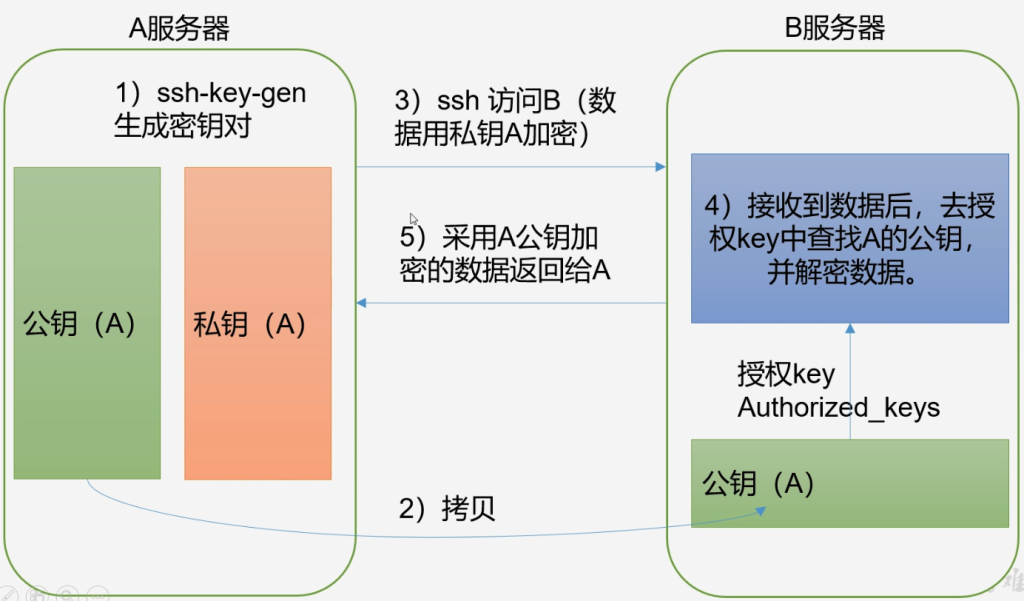

Generate a key pair

ssh-keygen -t rsa

Copy the public key to hadoop103

ssh-copy-id hadoop103

Cluster configuration

| | hadoop102 | hadoop103 | hadoop104 |
| --- | --- | --- | --- |
| HDFS | NameNode, DataNode | DataNode | SecondaryNameNode, DataNode |
| YARN | NodeManager | ResourceManager, NodeManager | NodeManager |

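- Do not put the NameNode and the SecondaryNameNode on the same server
- The ResourceManager is also resource-intensive, so do not place it on the same machine as the NameNode or the SecondaryNameNode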

Configuration files:

Hadoop configuration files come in two kinds: default configuration files and custom (site-specific) configuration files

- Default configuration files

| Default file | Location inside the Hadoop jars |
| --- | --- |
| core-default.xml | hadoop-common-3.1.3.jar/core-default.xml |
| hdfs-default.xml | hadoop-hdfs-3.1.3.jar/hdfs-default.xml |
| yarn-default.xml | hadoop-yarn-common-3.1.3.jar/yarn-default.xml |
| mapred-default.xml | hadoop-mapreduce-client-core-3.1.3.jar/mapred-default.xml |

- Custom configuration files, located under $HADOOP_HOME/etc/hadoop
  - core-site.xml
  - hdfs-site.xml
  - mapred-site.xml
  - yarn-site.xml

core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
    <!-- Address of the NameNode -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop102:8020</value>
    </property>

    <!-- Directory where Hadoop stores its data -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/module/hadoop-3.1.3/data</value>
    </property>

    <!-- Static user for the HDFS web UI: atguigu -->
    <property>
        <name>hadoop.http.staticuser.user</name>
        <value>atguigu</value>
    </property>
</configuration>

hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>


<!-- Put site-specific property overrides in this file. -->

<configuration>
    <!-- NameNode web UI address -->
    <property>
        <name>dfs.namenode.http-address</name>
        <value>hadoop102:9870</value>
    </property>

    <!-- SecondaryNameNode web UI address -->
    <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>hadoop104:9868</value>
    </property>
</configuration>

yarn-site.xml

<?xml version="1.0"?>


<configuration>
    <!-- Site specific YARN configuration properties -->

    <!-- Use the MapReduce shuffle auxiliary service -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>

    <!-- Host of the ResourceManager -->
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>hadoop103</value>
    </property>

    <!-- Environment variables that containers inherit -->
    <property>
        <name>yarn.nodemanager.env-whitelist</name>
        <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
    </property>
</configuration>

mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>


<!-- Put site-specific property overrides in this file. -->

<configuration>
    <!-- Run MapReduce programs on YARN -->
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>

Distribute the finished Hadoop configuration files across the cluster

xsync /opt/module/hadoop-3.1.3/etc/hadoop/

Starting the whole cluster

- Configure workers

hadoop102
hadoop103
hadoop104

- Distribute the workers file

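Starting the cluster

- If the cluster is being started for the first time, format the NameNode on hadoop102
- Formatting the NameNode generates a new cluster ID, so the cluster IDs of the NameNode and the DataNodes no longer match and the cluster cannot find its earlier data
- If the cluster fails while running and the NameNode has to be reformatted, first stop the NameNode and DataNode processes and delete the data and logs directories on every machine, and only then format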
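The cluster ID mismatch described above can be spotted by comparing the `clusterID` line in the VERSION files of the NameNode and a DataNode. The following is a minimal sketch on made-up files; `cluster_id` and `same_cluster` are hypothetical helper names, and the IDs are invented.

```shell
# Hypothetical helper: print the clusterID recorded in a VERSION file
cluster_id() {
    sed -n 's/^clusterID=//p' "$1"
}

# Hypothetical helper: succeed only if two VERSION files agree on clusterID
same_cluster() {
    [ "$(cluster_id "$1")" = "$(cluster_id "$2")" ]
}

# Demo with invented files standing in for the NameNode and DataNode copies
vdir=$(mktemp -d)
printf 'clusterID=CID-aaaa\nstorageType=NAME_NODE\n' > "$vdir/nn_VERSION"
printf 'clusterID=CID-bbbb\nstorageType=DATA_NODE\n' > "$vdir/dn_VERSION"

if same_cluster "$vdir/nn_VERSION" "$vdir/dn_VERSION"; then
    echo "clusterID matches"
else
    echo "clusterID mismatch"
fi
```

On a real cluster the two files would presumably be the VERSION files under the dfs/name/current and dfs/data/current directories inside the configured hadoop.tmp.dir, assuming the default storage directories are in use.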

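hdfs namenode -format

On startup, the services check whether the version information of the NameNode and the DataNodes matches, as recorded in the following file: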

/opt/module/hadoop-3.1.3/data/dfs/name/current/VERSION # records the cluster ID and related information

#Sat Apr 10 15:20:58 CST 2021

namespaceID=1747190975

clusterID=CID-cd9774d9-d045-4e14-a9cb-db212d83b27c

cTime=1618039258618

storageType=NAME_NODE

blockpoolID=BP-869501456-192.168.10.102-1618039258618

layoutVersion=-64

Start HDFS

sbin/start-dfs.sh

Start YARN on hadoop103, the node configured as the ResourceManager

sbin/start-yarn.sh

- View the HDFS NameNode in a browser
  - http://hadoop102:9870
  - Shows the data stored in HDFS
- View the YARN ResourceManager in a browser
  - http://hadoop103:8088
  - Shows the jobs running on YARN

Basic cluster tests

- Upload a file

hadoop fs -mkdir /input

hadoop fs -put ~/wcinput/words.txt /input

File storage path

/opt/module/hadoop-3.1.3/data/dfs/data/current/BP-869501456-192.168.10.102-1618039258618/current/finalized/subdir0/subdir0

- Concatenating blocks
  - cat with >> appends data to the given file
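Appending works because a large file is stored as consecutive pieces, so joining the pieces in order gives back the original bytes. The sketch below imitates this locally: `split` stands in for the way HDFS cuts a file into blocks, and the file names and the 4096-byte piece size are invented for the demo (real block files are named blk_ followed by an ID and are far larger).

```shell
work=$(mktemp -d)

# Invented payload of 1000 text lines standing in for an uploaded file
awk 'BEGIN { for (i = 1; i <= 1000; i++) print "line", i }' > "$work/original.bin"

# Cut it into 4096-byte pieces named blk_aa, blk_ab, ...
split -b 4096 "$work/original.bin" "$work/blk_"

# Append the pieces in name order, as with the cat commands in this post
: > "$work/rebuilt.bin"
for piece in "$work"/blk_*; do
    cat "$piece" >> "$work/rebuilt.bin"
done

# cmp is silent and succeeds when the two files are byte-for-byte identical
cmp "$work/original.bin" "$work/rebuilt.bin" && echo "rebuilt file is identical"
```

The order matters: appending the pieces in a different order would produce a file of the same size but with different contents.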

cat blk_xxx >> tmp.tar.gz

cat blk_yyy >> tmp.tar.gz

Download

hadoop fs -get /input/words.txt

- Run the wordcount program
  - In cluster mode, use HDFS paths

hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount /input /output

Recovering from a cluster crash

- Stop the service processes

sbin/stop-dfs.sh

- Delete the data and logs directories on every server
- Format the NameNode

hdfs namenode -format

Configuring the history server

- Configure mapred-site.xml

<!-- History server address -->
<property>
    <name>mapreduce.jobhistory.address</name>
    <value>hadoop102:10020</value>
</property>

<!-- History server web UI address -->
<property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>hadoop102:19888</value>
</property>

Distribute the configuration

xsync mapred-site.xml

- Start the history server on hadoop102
  - Restart YARN first

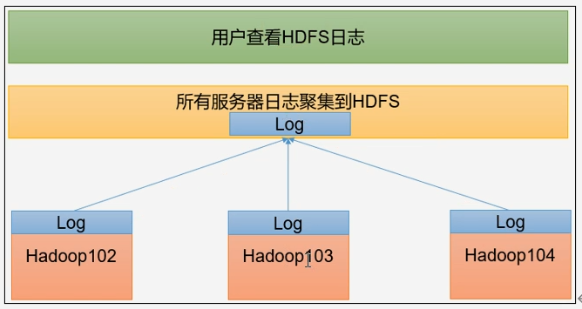

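bin/mapred --daemon start historyserver

Configuring log aggregation

After an application finishes, its run logs are uploaded to HDFS

Note: enabling log aggregation requires restarting the NodeManager, ResourceManager, and HistoryServer

- Configure yarn-site.xml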

<!-- Enable log aggregation -->
<property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
</property>

<!-- URL of the log aggregation server -->
<property>
    <name>yarn.log.server.url</name>
    <value>http://hadoop102:19888/jobhistory/logs</value>
</property>

<!-- Keep logs for 7 days (7 x 24 x 3600 = 604800 seconds) -->
<property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>604800</value>
</property>

- Distribute yarn-site.xml
- Stop the NodeManager, ResourceManager, and HistoryServer

bin/mapred --daemon stop historyserver

sbin/stop-yarn.sh

Start the services

sbin/start-yarn.sh

bin/mapred --daemon start historyserver

Summary of ways to start/stop the cluster

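Starting/stopping each module separately (requires ssh to be configured); this is the commonly used approach

- Start/stop HDFS as a whole

start-dfs.sh/stop-dfs.sh

- Start/stop YARN as a whole

start-yarn.sh/stop-yarn.sh

Starting/stopping each service component one by one

- Start/stop individual HDFS components

hdfs --daemon start/stop namenode/datanode/secondarynamenode

- Start/stop individual YARN components

yarn --daemon start/stop resourcemanager/nodemanager

Writing common Hadoop cluster scripts

Hadoop cluster start/stop script (covers HDFS, YARN, and the HistoryServer): myhadoop.sh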

- vim ~/bin/myhadoop.sh

#!/usr/bin/env bash

if [ $# -lt 1 ]
then
    echo "No Args Input..."
    exit 1
fi

case $1 in
"start")
    echo "========== Starting the Hadoop cluster =========="

    echo "---------- Starting HDFS ----------"
    ssh hadoop102 "/opt/module/hadoop-3.1.3/sbin/start-dfs.sh"
    echo "---------- Starting YARN ----------"
    ssh hadoop103 "/opt/module/hadoop-3.1.3/sbin/start-yarn.sh"
    echo "---------- Starting the HistoryServer ----------"
    ssh hadoop102 "/opt/module/hadoop-3.1.3/bin/mapred --daemon start historyserver"
;;
"stop")
    echo "========== Stopping the Hadoop cluster =========="

    echo "---------- Stopping the HistoryServer ----------"
    ssh hadoop102 "/opt/module/hadoop-3.1.3/bin/mapred --daemon stop historyserver"
    echo "---------- Stopping YARN ----------"
    ssh hadoop103 "/opt/module/hadoop-3.1.3/sbin/stop-yarn.sh"
    echo "---------- Stopping HDFS ----------"
    ssh hadoop102 "/opt/module/hadoop-3.1.3/sbin/stop-dfs.sh"
;;
*)
    echo "Input Args Error..."
;;
esac

- chmod 777 myhadoop.sh
- Distribute the file
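Before pointing a start/stop script at a real cluster, it can help to check the order of operations without running anything. The following is a dry-run sketch, not part of the original script: `myhadoop_plan` and `HADOOP_DIR` are hypothetical names, and the function only prints the ssh commands instead of executing them.

```shell
# Hypothetical dry-run helper: print the commands in the order the
# start/stop script would run them, without contacting any host.
HADOOP_DIR=/opt/module/hadoop-3.1.3

myhadoop_plan() {
    case $1 in
        start)
            echo "ssh hadoop102 $HADOOP_DIR/sbin/start-dfs.sh"
            echo "ssh hadoop103 $HADOOP_DIR/sbin/start-yarn.sh"
            echo "ssh hadoop102 $HADOOP_DIR/bin/mapred --daemon start historyserver"
            ;;
        stop)
            echo "ssh hadoop102 $HADOOP_DIR/bin/mapred --daemon stop historyserver"
            echo "ssh hadoop103 $HADOOP_DIR/sbin/stop-yarn.sh"
            echo "ssh hadoop102 $HADOOP_DIR/sbin/stop-dfs.sh"
            ;;
        *)
            echo "usage: myhadoop_plan start|stop" >&2
            return 1
            ;;
    esac
}

myhadoop_plan start
myhadoop_plan stop
```

The printed plan makes the symmetry visible: HDFS comes up first and goes down last, while the HistoryServer comes up last and goes down first.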

Script to list the Java processes on all three servers: jpsall

vim ~/bin/jpsall

#!/usr/bin/env bash

for host in hadoop102 hadoop103 hadoop104
do
    echo "========== $host =========="
    ssh "$host" jps
done

- Distribute the file

Common port numbers

hadoop3.x

- HDFS
  - NameNode internal communication port
    - 8020/9000/9820
  - NameNode web UI port (for user queries)
    - 9870
- YARN port for viewing job status
  - 8088
- History server web port
  - 19888

hadoop2.x

- HDFS
  - NameNode internal communication port
    - 8020/9000
  - NameNode web UI port (for user queries)
    - 50070
- YARN port for viewing job status
  - 8088
- History server web port
  - 19888
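The one port that differs between the two lists is the NameNode web UI port. A small lookup like the one below can keep scripts from hard-coding the wrong value; `namenode_web_port` is a hypothetical helper written for this post, and it only knows the two default ports listed above.

```shell
# Hypothetical helper: default NameNode web UI port for a Hadoop version string
namenode_web_port() {
    case $1 in
        3.*) echo 9870 ;;
        2.*) echo 50070 ;;
        *)   echo "unknown Hadoop version: $1" >&2; return 1 ;;
    esac
}

# Build the web UI address for the 3.1.3 cluster used in this post
echo "http://hadoop102:$(namenode_web_port 3.1.3)"
```

If dfs.namenode.http-address is overridden in hdfs-site.xml, the configured value takes precedence over these defaults.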

Commonly used configuration files

- core-site.xml

- hdfs-site.xml

- yarn-site.xml

- mapred-site.xml

- workers

- slaves (hadoop2.x)

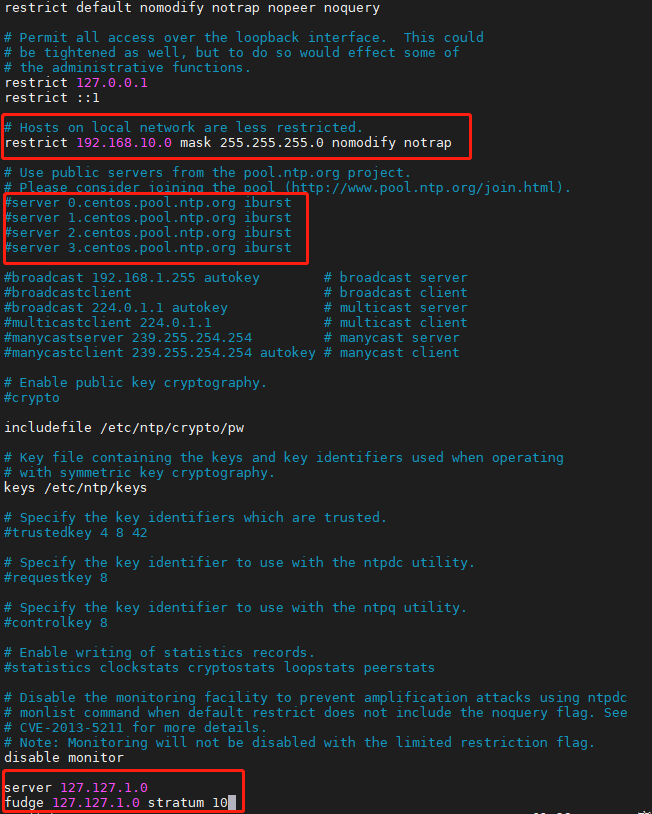

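Cluster time synchronization

If the servers can reach the public internet, no time synchronization setup is needed

On an internal network, set up a time server: pick one server whose clock serves as the reference

- Check the ntpd service status and whether it starts on boot, on all nodes

sudo yum -y install ntp

sudo systemctl status ntpd

sudo systemctl start ntpd

sudo systemctl is-enabled ntpd

Edit the ntp.conf configuration file on hadoop102

- Change 1: allow hosts on the cluster network segment to query the time server
- Change 2: disable the public internet time servers
- Change 3: when the node loses its network connection, it can still use its local clock to serve time to the other nodes in the cluster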
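The post does not show the edited lines themselves, so the following is only a guess at what the three changes could look like in /etc/ntp.conf. The subnet 192.168.10.0/255.255.255.0 is an assumption based on the 192.168.10.102 address that appears elsewhere in this post, the pool server names are the ones a stock CentOS ntp.conf usually contains, and `ntp_conf_sketch` is a hypothetical helper that just prints the fragment.

```shell
# Hypothetical helper: print an illustrative ntp.conf fragment.
# All values are assumptions; adapt them to the actual network.
ntp_conf_sketch() {
    cat <<'EOF'
# Change 1: allow hosts on the cluster subnet to query this server
restrict 192.168.10.0 mask 255.255.255.0 nomodify notrap
# Change 2: comment out the public pool servers
#server 0.centos.pool.ntp.org iburst
#server 1.centos.pool.ntp.org iburst
# Change 3: fall back to the local clock when the network is unavailable
server 127.127.1.0
fudge 127.127.1.0 stratum 10
EOF
}

ntp_conf_sketch
```

The address 127.127.1.0 is ntpd's pseudo-address for the local clock, and the stratum value of 10 marks it as a low-priority source.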

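- Edit /etc/sysconfig/ntpd on hadoop102

sudo vim /etc/sysconfig/ntpd

# Append this line so the hardware clock is synchronized together with the system clock

SYNC_HWCLOCK=yes

Restart the ntpd service

sudo systemctl restart ntpd

Enable ntpd at boot

sudo systemctl enable ntpd

Configure periodic time synchronization on hadoop103 and hadoop104

- Stop the ntp service on hadoop103 and hadoop104 and disable it at boot

sudo systemctl stop ntpd

sudo systemctl disable ntpd

Synchronize with the time server once every minute

sudo crontab -e

# Scheduled job

*/1 * * * * /usr/sbin/ntpdate hadoop102

Change the time on hadoop102

sudo date -s "2021-01-01 00:00:00"

After one minute, check whether the time has been synchronized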